Laurie Rosenow

In 2017 an Illinois mother of two children diagnosed with Autism Spectrum Disorder (ASD) filed a complaint against a sperm bank alleging that the sperm donor used to conceive both of the children was not the man he claimed to be.[i] Danielle Rizzo learned not only that donor H898 had lied about his education, but also that he had failed to disclose a history of learning disabilities and other developmental issues.[ii] Ms. Rizzo later discovered that she was not alone. To date at least a dozen other children conceived with donor H898’s sperm have been diagnosed with Autism Spectrum Disorder.[iii]

In 2010 Ms. Rizzo purchased donor H898’s sperm from Idant Laboratories, which listed the donor as a 6’1”, blonde-haired, blue-eyed college graduate with a master’s degree who had passed all of the lab’s health screenings.[iv] The only thing that turned out to be true was his appearance. Based on conversations with other women who had used donor H898 to conceive their children, some of whom had even met him, Rizzo learned that the donor had neither an undergraduate nor a graduate degree as advertised, had been diagnosed with ADHD, did not speak until age 3, and had attended a special school for children with learning and emotional disabilities.[v]

When Rizzo’s children were 3 and 4 years old, she contacted geneticist and autism researcher Stephen Scherer, Director of the Centre for Applied Genomics at The Hospital for Sick Children in Toronto, and connected him with other families who had affected children from donor H898. This group, known as an autism “cluster,” offers a rare opportunity for scientists to study what causes the disease and how to treat it. Dr. Scherer cautioned that his research to date is still preliminary, but his hypothesis is that something in the donor’s DNA caused the children to develop ASD.[vi]

The word “autism” is derived from the Greek root for “self” and describes a wide range of interpersonal behaviors that include impaired communication and social interaction, repetitive behaviors, and limited interests. These can be associated with psychiatric, neurological, physical, and intellectual disabilities that range from mild to severe.[vii]

Such a person may often appear removed from social interaction, becoming an “isolated self.”[viii] The Diagnostic and Statistical Manual of Mental Disorders (DSM-5) uses a broad definition of “autism spectrum disorder” that includes what were once distinct diagnostic disorders such as autistic disorder and Asperger syndrome.[ix] ASD affects four times more males than females, and symptoms usually manifest by the age of three.[x]

The March of Dimes estimates that 6% of children born worldwide each year will have a serious birth defect that has a genetic basis.[xi] The occurrence of ASD varies but is thought to be as high as 1% of the population.[xii] Unlike diseases such as Cystic Fibrosis or Tay-Sachs Disease, for which carrier testing exists, the genetics of ASD is not yet well understood. Geneticists such as Dr. Scherer suspect as many as 100 different genes may be associated with ASD. Over 100 genetic disorders, such as Rett Syndrome and Fragile X Syndrome, can exhibit features of ASD, further complicating the diagnosis and understanding of ASD. Dr. Scherer estimates that a subset of “high-impact” genes is involved in 5-20% of all ASD diagnoses.[xiii] Danielle Rizzo’s children were found by Dr. Scherer to carry two mutations associated with ASD.[xiv]

Despite the fact that genetic screening is available for many diseases, the United States does not require any genetic screening for gamete donors. Under federal law, sperm banks in the United States are regulated by the Food and Drug Administration, which requires donors of reproductive cells or tissue to undergo testing for certain enumerated communicable diseases such as HIV, Hepatitis B and C, chlamydia, and gonorrhea.[xv] “Sexually intimate partners,” however, are exempted from such screening.[xvi] The FDA also requires an establishment that conducts donor screening to review the donor’s medical records and social behavior for increased risk of communicable disease and to conduct a physical exam of the donor.[xvii] Retesting of donors is required after six months for any subsequent donations.[xviii]

Sperm banks in the U.S. are also not required to limit the number of semen samples sold or to keep track of live births resulting from their donors. And no law prohibits a man from donating to as many sperm banks as he likes. For example, a donor in Michigan who donated his semen twice a week between 1980 and 1994 had fathered at least 400 children by 2010.[xix] A mother of a donor child was able to trace at least 150 half-siblings of her son using web-based registries.[xx] Danielle Rizzo discovered that sperm from her donor, H898, was still being sold by at least four sperm banks, even though they had received calls and letters warning them of her experience.[xxi] With the popularity of DNA home testing kits such as 23andMe and Ancestry.com, as well as voluntary donor registries such as Donor Sibling Registry, even more children from donors like H898 are likely to be discovered.

In addition to the FDA rules mandating screening for communicable disease, the American Society for Reproductive Medicine (“ASRM”) advises that sperm banks include only donors who are between the ages of 18 and 40 and provide prospective donors with a psychological evaluation and counseling performed by a mental health professional.[xxii] The industry group recommends genetic testing for cystic fibrosis of all donors and other genetic testing that is indicated by the donor’s ethnic background. The group does not recommend a chromosomal analysis of all donors.

The American College of Obstetricians and Gynecologists (“ACOG”) as well as ASRM recommend limiting the number of children born to a single gamete donor.[xxiii] While populations will vary, to limit the possibility of consanguinity, ACOG recommends a maximum of 25 children born from a single donor per population of 800,000.[xxiv] The challenge in setting limits on the number of children born to a sperm donor lies in obtaining the information and keeping updated records. Many women purchase sperm from banks across the country and even the globe, with no legal incentive to inform a sperm bank of any resulting children or their health status. Sperm banks are also unlikely to share information with donors regarding the number of their semen vials sold, let alone any children that result.

Despite the lack of a federal mandate, most sperm banks voluntarily screen for genetic defects.[xxv] However, many diseases that are thought to have a hereditary component, ASD among them, cannot be tested for. Clinicians and patients are forced to rely on the donor to give truthful and accurate medical and family histories and on the banks to document such information accurately.

Like Danielle Rizzo, another mother of two children diagnosed with ASD conceived with donor H898’s sperm filed a lawsuit against Idant Labs, including claims for fraud, negligent misrepresentation, strict products liability, false advertising, deceptive business practices, battery, and negligence.[xxvi] Danielle Rizzo settled her claims against Idant’s parent company Daxor Co. in 2017 for $250,000, though she alleges it is a fraction of the estimated $7 million in care that will be needed for both of her children.[xxvii]

Similar lawsuits were filed against Xytex, a sperm bank based in Atlanta, Georgia, regarding sperm it sold from Donor #9623, Chris Aggeles, who was advertised as having a genius-level IQ of 160 and pursuing a PhD in neuroscience engineering.[xxviii] In fact, the donor at the time was a high school dropout with a criminal record and a history of mental disorders including schizophrenia, bipolar disorder, and narcissistic personality disorder.[xxix] He had been a donor at Xytex for fourteen years. The plaintiffs claimed the company did not verify any of the information the donor had given them, but Xytex claims it discloses to prospective clients that any representations by the donor are his alone.[xxx] Recently nine families with 13 children conceived with sperm from Aggeles settled their claims for wrongful birth, failure to investigate, and fraud.[xxxi]

Despite the monetary damages awarded in settlement of these lawsuits, a case filed in the Third Circuit against Idant was dismissed because the court found a liability claim based on the quality of sperm to be indistinguishable from a wrongful life claim, which New York prohibits.[xxxii] “The difficulty B.D. now faces and will face are surely tragic, but New York law, which controls here, states that she ‘like any other [child], does not have a protected right to be born free of genetic defects.’” Idant in this case had sold the sperm of Donor G738 to a mother in Pennsylvania whose daughter was diagnosed as a Fragile X carrier.

Both mothers of the children diagnosed with ASD from donor H898’s sperm left professional careers to care for their children and alleged severe financial losses as a result.[xxxiii] Donor H898, however, was not a party to the suits, nor were any other establishments selling vials of his semen, and therefore neither he nor they are bound by any settlement agreements that might restrict future donations. Since lawsuits only offer the possibility of damages and other relief after an injury has occurred, and some jurisdictions, like New York, will not even consider claims related to defective sperm, policies that focus on avoiding harm prior to insemination should be considered.

Because screening is not available for many diseases that likely have a strong genetic component, the family and medical history of donors becomes critical as a secondary method of screening. To encourage more accurate reporting, sperm banks could require signed, sworn affidavits from donors attesting to the truthfulness and accuracy of the information they provide. Many banks claim they run criminal background checks on donors, but they could also verify claims of employment and education with a simple phone call. Laws mandating a cap on the number of vials an individual may donate make sense in light of the vast numbers of children possibly being conceived from popular donors. It may also be time for sperm banks in the U.S. to follow the example of the UK, which allows children conceived from donor gametes to obtain medical information from donors at age 16 and the full name, date of birth, and address of their donors at age 18.[xxxiv] In the age of DNA testing, social media, and cyber stalking, anonymity may be unrealistic. If sperm banks do not tighten their internal policies for screening donors, more avoidable tragedies are likely to occur.

Laurie Rosenow is an attorney and former Senior Fellow at the Institute for Science, Law & Technology.

[i] Rizzo v. Idant Labs, Case No. 17-cv-00998, N.D. Ill. Jan 31, 2017.

[ii] Id. See also, Ariana Eunjung Cha, “The Children of Donor H898,” Wash. Post, Sept. 14, 2019.

[iii] Id. See also, Doe v. Idant Labs, complaint filed NY State Supreme Court, Civil Branch, June 2016.

[iv] Cha, “The Children of Donor H898.”

[v] Id.

[vi] Id. Dr. Scherer also noted, however, that the donor could have other biological children who are not affected.

[vii] Yuen, R.K.C. et al, “Whole Genome Sequencing Resource Identifies 18 New Candidate Genes for Autism Spectrum Disorder,” 20 Nat. Neurosci., 602-611 (2017).

[viii] “What Does the Word ‘Autism’ Mean?” WebMD, available at https://www.webmd.com/brain/autism/what-does-autism-mean#1.

[ix] Autism Spectrum Disorder, Diagnostic Criteria, Centers for Disease Control, available at https://www.cdc.gov/ncbddd/autism/hcp-dsm.html.

[x] Yuen et al, “Whole Genome Sequencing Resource Identifies 18 New Candidate Genes for Autism Spectrum Disorder.” For examples of common behaviors found in children with ASD, see National Institute of Mental Health. Autism Spectrum Disorder Overview, available at https://www.nimh.nih.gov/health/topics/autism-spectrum-disorders-asd/index.shtml.

[xi] March of Dimes Global Report on Birth Defects 2006, available at https://www.marchofdimes.org/global-report-on-birth-defects-the-hidden-toll-of-dying-and-disabled-children-full-report.pdf

[xii] Anney, Richard et al, “A Genome-wide Scan for Common Alleles Affecting Risk for Autism,” Hum. Mol. Gen. Vol. 19, No. 20, p. 4072-4082 (2010).

[xiii] Cha, “The Children of Donor H898.”

[xiv] The genetic mutations found in her sons were MBD1 and SHANK1. Cha, “The Children of Donor H898,” Washington Post, Sept. 14, 2019.

[xv] 21 C.F.R. Sec. 1271.75.

[xvi] 21 C.F.R. Sec. 1271.90.

[xvii] 21 C.F.R. Sec. 1271.50 (2006). See also, https://www.fda.gov/vaccines-blood-biologics/safety-availability-biologics/what-you-should-know-reproductive-tissue-donation. Donor screening consists of reviewing the donor’s relevant medical records for risk factors for, and clinical evidence of, relevant communicable disease agents and diseases. These records include a current donor medical history interview to determine medical history and relevant social behavior, a current physical examination, and treatments related to medical conditions that may suggest the donor is at increased risk for a relevant communicable disease.

[xviii] 21 C.F.R. Sec. 1271.85 (d).

[xix] Newsweek Staff, “Genetic Lessons from a Prolific Sperm Donor,” Newsweek, Dec. 15, 2009, also available at https://www.newsweek.com/genetic-lessons-prolific-sperm-donor-75467. See also, Hayes, Daniel, “9 Sperm Donors Whose Kids could Populate a Small Town,” Thought Catalog, Jan. 13, 2016, available at https://thoughtcatalog.com/daniel-hayes/2016/01/9-sperm-donors-whose-kids-could-populate-a-small-town/.

[xx] Mroz, Jacqueline, “One Sperm Donor, 150 Offspring,” New York Times, Sept. 5, 2011, also available at https://www.nytimes.com/2011/09/06/health/06donor.html.

[xxi] Cha, “The Children of Donor H898.”

[xxii] “Recommendations for Gamete and Embryo Donation,” 99 Fertility and Sterility 1, p.47-62, Jan. 2013, available at, https://www.fertstert.org/article/S0015-0282(12)02256-X/fulltext#sec1. See also, https://www.reproductivefacts.org/news-and-publications/patient-fact-sheets-and-booklets/documents/fact-sheets-and-info-booklets/third-party-reproduction-sperm-egg-and-embryo-donation-and-surrogacy/

[xxiii] ACOG Committee Opinion: Genetic Screening of Gamete Donors, Int’l Jour. Gyn & Obst. 60 (1998) 190-192, available at https://obgyn.onlinelibrary.wiley.com/doi/abs/10.1016/S0020-7292%2897%2990229-0

[xxiv] Id.

[xxv] See, e.g., California Cryobank, one of the largest sperm banks in the United States: https://www.cryobank.com/services/genetic-counseling/donor-screening/

[xxvi] Doe v. Idant Labs, complaint filed N.Y. State Supreme Ct., June 2016, available at https://www.donorsiblingregistry.com/sites/default/files/Rizzo%20complaint.pdf.

[xxvii] Cha, Ariana Eunjung, “Danielle Rizzo’s Donor-conceived Sons Both Have Autism. Should Someone be Held Responsible?” Wash. Post, Oct. 3, 2019, available at https://www.washingtonpost.com/health/2019/10/03/danielle-rizzos-sons-donor-conceived-sons-both-have-autism-should-someone-be-held-responsible/

[xxviii] Johnson, Joe, “UGA Employee at Center of Sperm Bank Fraud,” Athens Banner-Herald, Sept. 3, 2016.

[xxix] Id. See also, Van Dusen, Christine, “A Georgia Sperm Bank, a Troubled Donor, and the Secretive Business of Babymaking,” Atlanta Magazine (March 2018), also available at https://www.atlantamagazine.com/great-reads/georgia-sperm-bank-troubled-donor-secretive-business-babymaking/ (Feb. 13, 2018).

[xxx] Djoulakian, Hasmik, “The “Outing” of Sperm Donor 9623,” Biopolitical Times, June 30, 2016, available at https://www.geneticsandsociety.org/biopolitical-times/outing-sperm-donor-9623. See also, Johnson, Joe, “UGA Employee at Center of Sperm Bank Fraud,” Athens Banner-Herald, Sept. 3, 2016.

[xxxi] Hersh & Hersh law firm, “Major Settlement of Sperm Bank/Deceptive Business Practice Case,” available at https://hershlaw.com/success-2/. See also, Khandaker, Tamara, “Lawsuit Alleges Sperm Bank’s Genius Donor Was Actually a Schizophrenic Ex-Con,” Vice News, Apr. 15 2016, available at https://www.vice.com/en_us/article/neykmx/lawsuit-alleges-sperm-banks-genius-donor-was-actually-a-schizophrenic-ex-con

[xxxii] D.D. v. Idant Labs, (3rd Cir. 2010).

[xxxiii] Doe v. Idant Labs, Complaint; Cha, “The Children of Donor H898.” See also, Cha, “Danielle Rizzo’s Donor-conceived Sons Both Have Autism.”

[xxxiv] Human Fertilisation and Embryology Authority, “Rules Around Releasing Donor Information,” available at https://www.hfea.gov.uk/donation/donors/rules-around-releasing-donor-information/.

By Alexandra M. Franco, Esq.

By Alexandra M. Franco, Esq.

By Andrew White

By Andrew White

By Joan M. LeBow and Clayton W. Sutherland

By Joan M. LeBow and Clayton W. Sutherland

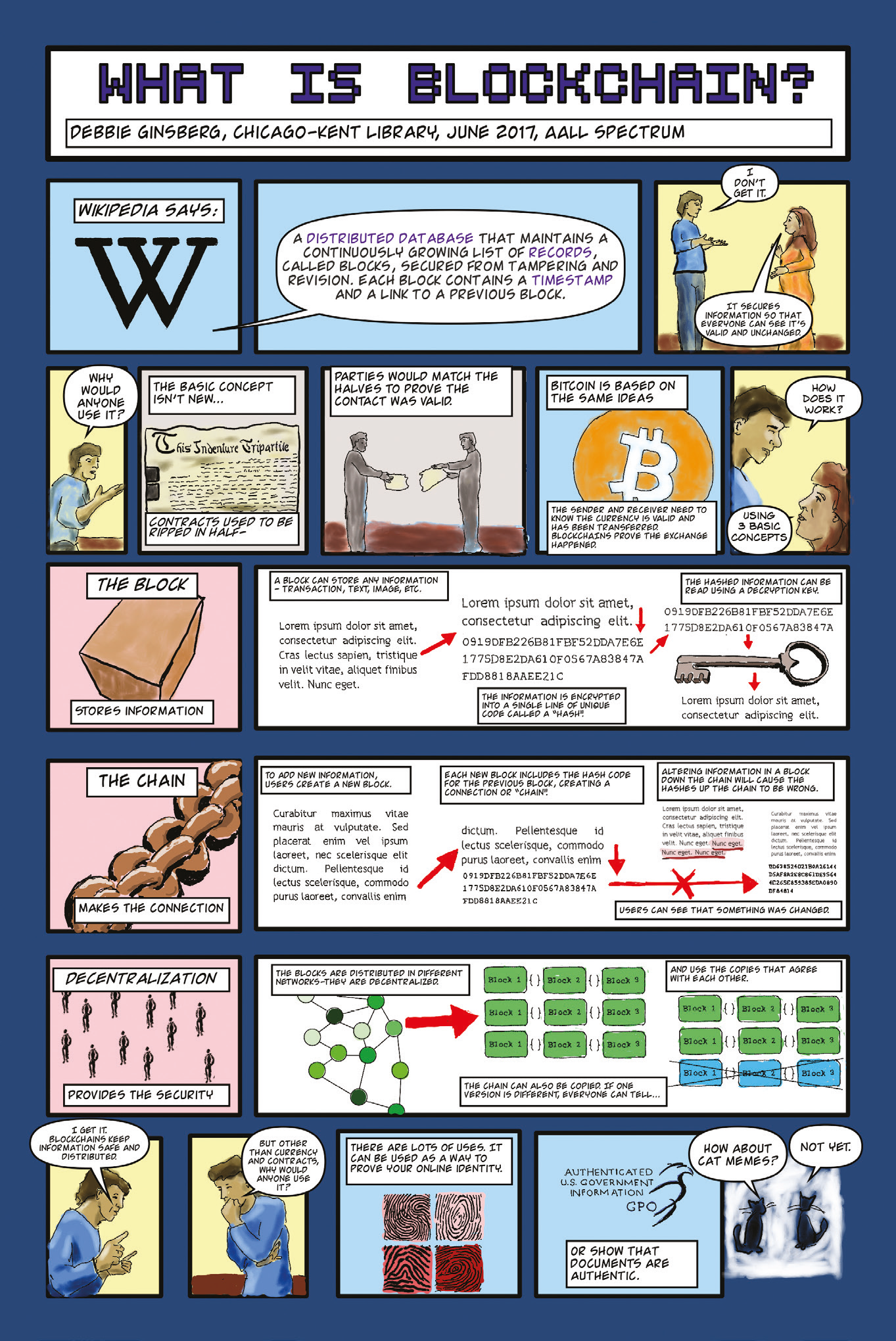

By Debbie Ginsberg

By Debbie Ginsberg