By Andrew White

As a wave of new technology surges forward, the law tries to keep up with its negative ripple effects. But is the law up to the task of regulating deep fakes? Recent advances in artificial intelligence have made it possible to create, from whole cloth, video and audio that make it appear that their subjects have done or said things they never did. These puppet-like videos are called deep fakes.

Deep fakes are most commonly created with generative adversarial networks (GANs), artificial intelligence algorithms that pit two networks against each other: one generates candidate images from existing images of the intended target, and the other judges them against the real thing. The images bounce back and forth until a lifelike video puppet is created or the individual’s face is convincingly overlaid onto an existing video. These videos may be used to further political agendas.

For example, this video, created by a French AIDS charity, falsely depicts President Trump declaring an end to the AIDS crisis. Other types of altered political media, while not technically deep fakes, have met with viral success on social media. This manipulated video, which appears to show the Speaker of the House drunk and incoherent on the job, quickly circulated on Facebook and Twitter and even caught a retweet from Rudy Giuliani. Finally, deep fake videos have also been used by scorned ex-partners to create revenge porn.

Danielle Citron, a Professor of Law at Boston University, suggested in her testimony before the House Permanent Select Committee on Intelligence that a combination of legal, technological, and societal efforts is the best solution to the misuse of deep fakes:

“[w]e need the law, tech companies, and a heavy dose of societal resilience to make our way through these challenges.”

Google is working to improve its technology for detecting deep fakes. Facebook, Microsoft, the Partnership on AI, and Amazon have teamed up to create the Deepfake Detection Challenge. Twitter is actively collecting survey responses to gauge how users of its platform would like to see deep fakes handled: through outright removal of deep fake videos, labelling of deep fake videos, or alerts when users are about to share a deep fake video. There have also been efforts in the technology world to curb the influence of altered media and deep fake videos on the user side, so that users can investigate for themselves the media they see.

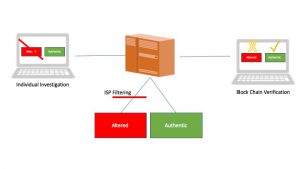

Three mechanisms of technological blockchain regulation. By Andrew White 2019.

For example, this algorithm tracks subtle head movements to detect whether a video is real or fake. The Department of Defense has created another algorithm, which tracks the eye blinking of subjects in a video and compares it with that seen in bona fide videos. Deep fakes are becoming so well crafted, though, that there may come a time when they cannot be reliably detected. Other methods have been developing alongside advances in artificial intelligence, such as the use of blockchain verification to establish the provenance of videos and audio before they are posted.
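To make the idea of blockchain provenance more concrete, the following is a minimal sketch in Python, not a description of any deployed system. It assumes a publisher registers a cryptographic hash of each video at the time of release, and that each record also stores the hash of the previous record, so that rewriting an earlier entry breaks the chain. The names `ProvenanceLedger`, `Block`, `register`, and `lookup` are hypothetical, and an in-memory list stands in for a real distributed ledger.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Optional


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Block:
    """One hypothetical ledger record for a registered piece of media."""
    index: int
    media_hash: str   # fingerprint of the media file's bytes
    publisher: str    # who registered the media (illustrative field)
    prev_hash: str    # hash of the preceding block, linking the chain

    def block_hash(self) -> str:
        payload = json.dumps(
            [self.index, self.media_hash, self.publisher, self.prev_hash]
        ).encode("utf-8")
        return sha256_hex(payload)


class ProvenanceLedger:
    """In-memory stand-in for a distributed ledger (an assumption)."""
    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.blocks: List[Block] = []

    def register(self, media: bytes, publisher: str) -> Block:
        """Append a record of the media's hash, linked to the prior record."""
        prev = self.blocks[-1].block_hash() if self.blocks else self.GENESIS
        block = Block(len(self.blocks), sha256_hex(media), publisher, prev)
        self.blocks.append(block)
        return block

    def chain_is_intact(self) -> bool:
        """Check that every block points at the hash of its predecessor."""
        prev = self.GENESIS
        for i, block in enumerate(self.blocks):
            if block.index != i or block.prev_hash != prev:
                return False
            prev = block.block_hash()
        return True

    def lookup(self, media: bytes) -> Optional[Block]:
        """Return the record for byte-identical media, or None."""
        if not self.chain_is_intact():
            return None
        digest = sha256_hex(media)
        for block in self.blocks:
            if block.media_hash == digest:
                return block
        return None
```

Under these assumptions, a platform could call `lookup` on an uploaded file before it is posted: a match indicates the file is byte-for-byte what the publisher registered, while an altered copy produces a different hash and returns no record. One limitation of this sketch is that an exact hash also changes when a video is merely re-encoded or trimmed, so it establishes provenance only for unmodified copies.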

From a legal perspective, legislatures have begun to realize the impact that deep fakes have on Americans’ political and sexual autonomy. The federal government is working on legislation to require the Department of Homeland Security to research the status and effects of deep fakes. Legislation restricting the distribution of deep fakes has already been passed in various states, but as the statutes demonstrate, it may be more difficult than anticipated to truly stem the influx of deep fakes.

Texas, in enacting S.B. No. 751, targets deep fakes whose creators intend to influence the outcome of an election. This broad statute criminalizes the creation or distribution of a deep fake video, within 30 days of an election, with the intent to influence the election or injure a candidate. Interestingly, the Texas legislature specified that a “deep fake video [is a] video created with artificial intelligence [depicting] a real person performing an action that did not occur in reality.” This area of law is rapidly evolving, and the contours of this statute have not been clearly established. For example, it is not clear whether the altered video of Nancy Pelosi would be covered. In the Pelosi video, the footage was slowed down, and the pitch of the speech was raised to make it appear that the slowed voice was actually Nancy Pelosi’s. These material alterations were not created with artificial intelligence. In addition, Pelosi did actually speak the words, and in the same order as in the altered video. Would this fall under the statute’s proscription of videos where the subject is “performing an action that did not occur in reality”?

A recent Virginia statute targets a different category of deep fakes: revenge porn. S.B. No. 1736 adds the phrase “including a falsely created videographic or still image” to the existing revenge porn statute. This broader language seems to include pornographic likenesses created by GANs (generative adversarial networks) or other algorithms. Would the statute still protect a victim whose likeness in the video differs in some minor way (such as a missing or added tattoo), or would that difference be enough to place the video outside the statute’s protection?

A similar cause of action was added to California law by A.B. No. 602, which was signed into law by Governor Newsom in October 2019. This statute adds a private right of action to the existing revenge porn statute for victims who have been face- or body-swapped into a recording of a sexual act that is published without their consent.

California also passed AB 730 alongside the revenge porn amendment. This law prohibits the distribution of any “deceptive audio or visual media … with the intent to injure the candidate’s reputation or to deceive a voter” within 60 days of an election. The law defines “materially deceptive audio or visual media” as that which “would falsely appear to a reasonable person to be authentic and would cause a reasonable person to have a fundamentally different understanding . . . than that person would have if the person were hearing or seeing the unaltered, original version of the image or audio or video recording.”

This law also has notable exceptions: it does not apply to newspapers or other news media, nor does it apply to paid campaign ads. These exceptions may undermine the entire purpose of the bill, as Facebook has publicly asserted that it will not verify the truth or falsehood of political ads purchased on its platform.

Finally, traditional tort law may allow for recovery in certain situations where state statutes fail. The torts of intentional infliction of emotional distress, defamation, and false light all could apply, depending on the facts. These remedies, though, may provide only monetary damages and not the removal of the video itself. The problem with applying tort law in the deep fake context is similar to the limitations of AB 730. Finding the creator of a deep fake, and then proving the creator’s intent, may be a Herculean task. Even after the creator is found, it is difficult to mount a full civil case against them. And even if a victim does manage to bring a cause of action against a deep fake creator, the damage may already have been done.

The area of AI and deep fakes is a rapidly evolving one, from both a technological and a legal perspective. The coming together of technology and law to combat the dark side of advances in artificial intelligence is encouraging, even as technology rushes forward to realize the more positive effects of artificial intelligence. It seems, then, that the only solution to the problem of deep fakes is a combination of legal and technological remedies, and, in the words of Danielle Citron, “a heavy dose of societal resilience.”

Andrew White is a 1L Research Fellow at the Institute for Science, Law & Technology at IIT Chicago-Kent College of Law. Andrew received his Master of Science in Law from Northwestern Pritzker School of Law and his Bachelor of Science from the University of Michigan, where he studied Cellular and Molecular Biology and French and Francophone Studies.

By Michael Goodyear
